CASE STUDY

AI + Live Agent

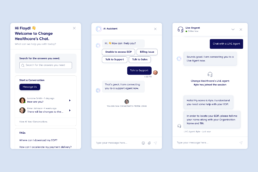

Designed a hybrid conversational system, powered by LLM-driven intent recognition, that balanced automation with empathy and reshaped how healthcare providers access critical payment data. The project showed that AI can amplify the human experience when it is designed with understanding, not just intelligence, and can deliver faster, more natural resolution.

Bridging automation and empathy in healthcare communication

This project started with a human problem, not a technical one. Healthcare providers were overwhelmed with calls to access Explanation of Payments (EOPs). I led the design of an AI + Live Agent hybrid system that paired automation with empathy. We co-created the conversation architecture with agents, validated it with providers, and refined it until it felt as intuitive as talking to a trusted colleague.

Outcomes

- 90% reduction in daily support calls

- 40% faster handling time

- 18% increase in sales from improved self-service adoption

What we did

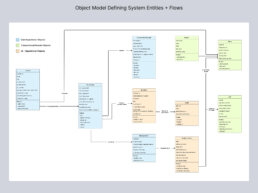

- Established object modeling as the foundation, aligning product and engineering, informing the data structure, ensuring ethical, bias-aware system behavior, and guiding conversation logic.

- Mapped top provider journeys and identified friction in accessing EOPs.

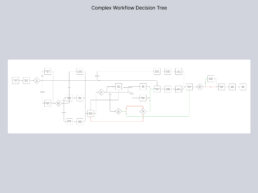

- Designed conversational flows, powered by LLM intent recognition, that blended automation with seamless escalation to Live Agents for edge cases.

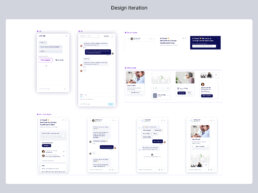

- Developed natural-language prototypes and tested them with real providers to validate tone, trust, and clarity.

- Expanded beyond EOP retrieval to billing, sales, and support use cases using the same AI + Live Agent system.

- Embedded learning loops with engineering to improve intent recognition over time.

What helped

- Co-design sessions that kept the human voice at the center of every AI decision.

- Cross-functional commitment between Product, Engineering, and Customer Support to blend machine capability with emotional intelligence.

- Rapid iteration cycles and in-the-field testing with real providers.

The work behind the results

From journey mapping and conversation architecture to LLM intent design, object modeling, flows, wireframes, prototyping, and usability testing: this is the work that made automation feel human.

What I learned

This project reinforced that conversational AI isn’t just about flows or intent matching; it’s about designing a system in which human judgment, AI capability, and business outcomes remain in balance.

Object modeling became central to the work. By defining clear entities, actions, and relationships, we aligned product and engineering, reduced ambiguity, and created a data structure that supported scalability, bias awareness, and ethical guardrails from the start. Designing around LLM-based components made it clear how UX decisions directly influence model behavior, error handling, and user trust.

Co-designing with live agents helped ground automation in reality, clarifying where AI could confidently scale and where human-in-the-loop support was essential. The biggest takeaway: effective AI systems don't replace people; they responsibly extend human expertise and deliver measurable impact through trust, adoption, and performance.