CASE STUDIES

Designing for Impact & Growth

The case studies below showcase my hands-on leadership across design strategy, systems thinking, and execution, from concept to launch. Select case studies are shared in context, based on their relevance to the opportunity.

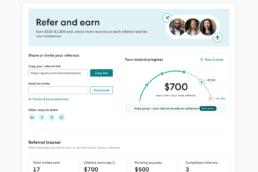

Growth Referrals Journey

Redefined how users discover, share, and earn through referrals by designing a system grounded in motivation and timing — not marketing gimmicks. A data-led, human-centered redesign that turned a stagnant referral program into a self-sustaining growth engine.

Digital Marketplace Platform

Designed an enterprise-scale marketplace that brought order, speed, and trust to complex B2B transactions. Translated operational chaos into a unified buying experience for providers and payers, accelerating revenue and strengthening cross-functional alignment.

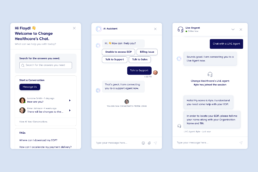

AI + Live Agent

Designed a hybrid conversational system that balanced automation with empathy, reshaping how healthcare providers access critical payment data. Proved that AI can amplify the human experience when designed with understanding, not just intelligence.

From Insight to Impact

The gap between understanding users and shipping value isn’t about research volume; it’s about translating insight into decisions that matter. Most teams collect data but struggle to act on it with confidence. The difference comes down to knowing what’s actionable, what’s ambiguous, and what deserves deeper validation before committing resources.

Great products emerge when insight drives prioritization, not just documentation. This means balancing speed with rigor: running lean discovery when the cost of being wrong is low, investing in depth when the stakes are high, and always connecting what we learn back to measurable business outcomes. The goal is building products people trust and adopt through repeated use, not just features that test well in isolation.

My Philosophy

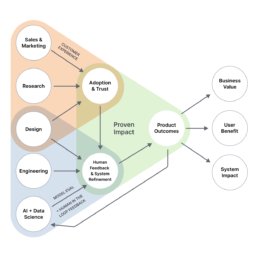

How I Design for AI-Driven Product Success

Whether it’s a startup product racing toward product–market fit or an enterprise platform built for scale, great products don’t succeed just because they function. They succeed when they’re shipped, adopted, trusted, and continuously improved.

This visual reflects how I approach building AI-driven systems responsibly: combining human insight, model behavior, engineering rigor, and feedback loops that keep improving performance long after launch.

For me, intelligent products should not only work; they should learn, adapt, and deliver measurable value for both people and the business.

How I Work

Design Strategy

Ground every decision in purpose and evidence.

• User-centered approach

• Data-informed insights

• Competitive analysis

• Cross-functional alignment

• Prioritization tied to outcomes

AI/ML Product Design

Design systems that learn, adapt, and perform.

• System design + data design

• Model evaluation and refinement

• Human-in-the-loop validation

• Responsible AI principles

• Scalable patterns for AI-driven workflows

Art of the Possible

Bring ideas to life fast and refine through proof.

• Rapid prototyping

• User testing

• Feedback loops that de-risk decisions

• Iterative design

• Continuous experimentation

Manish brings clarity and structure to ambitious, cross-functional work. His ability to frame ambiguity, connect design to business outcomes, and drive alignment across teams makes him an invaluable design leader.
Kelly Griggs, Head of Design for Growth, Gusto (via LinkedIn)

Manish’s passion for elevating user experiences is contagious. His innovative thinking, cross-functional collaboration, and relentless drive for quality transformed how we design and deliver digital experiences.
Katie Bradley, Staff VP of Tech User Experience, Elevance Health (via LinkedIn)

Manish brings teams together around what truly matters: creating meaningful, business-aligned user experiences. His leadership and perspective made design thinking an integral part of our product strategy.
Smriti Anand, Sr. Product Manager, Change Healthcare (via LinkedIn)

Manish inspires cross-functional teams to see the bigger picture, crafting delightful end-to-end experiences grounded in research and strategy. He brings vision, clarity, and collaboration to every stage of the product lifecycle.
Emilie Lostracco, Sr. Manager, UX Research, Change Healthcare (via LinkedIn)

Manish listens with empathy, finds common ground, and designs with purpose. His ability to connect business, marketing, and user needs makes him a transformative design leader.
Nicole Bateman, Marketing Executive, Elevance Health (via LinkedIn)

Manish combines curiosity with discipline, blending data, design, and experimentation to uncover insights and drive measurable business outcomes.
Amy Smessaert, Experimentation Lead, Change Healthcare (via LinkedIn)

©2026 Duanamic | Manish Dua. All Rights Reserved.